This morning, the new release of the -Nikita -Noark 5 core project was -announced -on the project mailing list. The free software solution is an -implementation of the Norwegian archive standard Noark 5 used by -government offices in Norway. These were the changes in version 0.2 -since version 0.1.1 (from NEWS.md): +

+All the books I have published so far have used +DocBook somewhere in the process. +For the first book, the source format was DocBook, while for every +later book it was an intermediate format used as the stepping stone to +be able to present the same manuscript in several formats, on paper, +as an ebook in ePub format, as an HTML page and as a PDF file either for +paper production or for Internet consumption. This is made possible +with a wide variety of free software tools with DocBook support in +Debian. The source format of later books has been docx via rst, +Markdown, Filemaker and Asciidoc, and for all of these I was able to +generate a suitable DocBook file for further processing using pandoc, +a2x and asciidoctor, as well as rendering using +xmlto, +dbtoepub, +dblatex, +docbook-xsl and +fop.

+ +Most of the books I +have published are translated books, with English as the source +language. The use of +po4a to +handle translations using the gettext PO format has been a blessing, +but publishing translated books has triggered the need to ensure the +DocBook tools handle relevant languages correctly. For every new +language I have published, I had to submit patches to dblatex, dbtoepub +and docbook-xsl fixing incorrect language and country specific issues +in the frameworks themselves. Typically this has been missing keywords +like 'figure' or sort ordering of index entries. After a while it +became tiresome to only discover issues like this by accident, and I +decided to write a DocBook "test framework" exercising various +features of DocBook and allowing me to see all features exercised for +a given language. It consists of a set of DocBook files: a version 4 +book, a version 5 book, a v4 book set, a v4 selection of problematic +tables, one v4 testing sidefloat and finally one v4 testing a book of +articles. The DocBook files are accompanied by a set of build rules +for building PDF using dblatex and docbook-xsl/fop, HTML using xmlto +or docbook-xsl and epub using dbtoepub. The result is a set of files +visualizing footnotes, indexes, table of contents list, figures, +formulas and other DocBook features, allowing for a quick review of +the completeness of the given locale settings. To build with a +different language setting, all one needs to do is edit the lang= value +in the .xml file to pick a different ISO 639 code value and run +'make'.
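The language switch described above can also be scripted. The following is a minimal Python sketch, not part of the test framework itself. It assumes the language is set by the first lang= (DocBook 4) or xml:lang= (DocBook 5) attribute in the file, and the file name book-v4.xml in the usage note is a hypothetical placeholder.

```python
import re
import subprocess

# Matches lang="..." as well as xml:lang="...", with single or double quotes.
LANG_ATTR = re.compile(r'(\blang=)(["\'])[^"\']*\2')


def set_docbook_lang(xml_text: str, lang: str) -> str:
    """Return xml_text with the first lang attribute value replaced by lang."""
    def replace(match: re.Match) -> str:
        quote = match.group(2)
        return f"{match.group(1)}{quote}{lang}{quote}"
    return LANG_ATTR.sub(replace, xml_text, count=1)


def rebuild(xml_path: str, lang: str) -> None:
    """Rewrite the lang attribute in xml_path and run 'make' in the current directory."""
    with open(xml_path, encoding="utf-8") as source:
        text = source.read()
    with open(xml_path, "w", encoding="utf-8") as target:
        target.write(set_docbook_lang(text, lang))
    subprocess.check_call(["make"])
```

Calling rebuild("book-v4.xml", "nb") from the directory holding the build rules would then switch the hypothetical book-v4.xml to Norwegian Bokmål and rebuild every output format.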

+ +The test framework +source code is available from Codeberg, and a generated set of +presentations of the various examples is available as Codeberg static +web pages at +https://pere.codeberg.page/docbook-example/. +Using this test framework I have been able to discover and report +several bugs and missing features in various tools, and got a lot of +them fixed. For example I got Northern Sami keywords added to both +docbook-xsl and dblatex, several typos fixed in Norwegian Bokmål and +Norwegian Nynorsk, support for non-ascii title IDs added to pandoc, +Norwegian index sorting support fixed in xindy and initial Norwegian +Bokmål support added to dblatex. Some issues still remain, though. +Default index sorting rules are still broken in several tools, so the +Norwegian letters æ, ø and å are more often than not sorted incorrectly +in the book index.

+ +The test framework recently received some more polish, as part of +publishing my latest book. This book contained a lot of fairly +complex tables, which exposed bugs in some of the tools. This made me +add a new test file with various tables, as well as spend some time to +brush up the build rules. My goal is for the test framework to +exercise all DocBook features to make it easier to see which features +work with different processors, and hopefully get them all to support +the full set of DocBook features. Feel free to send patches to extend +the test set, and test it with your favorite DocBook processor. +Please visit these two URLs to learn more:

-

-

- Fix typos in REL names -

- Tidy up error message reporting -

- Fix issue where we used Integer.valueOf(), not Integer.getInteger() -

- Change some String handling to StringBuffer -

- Fix error reporting -

- Code tidy-up -

- Fix issue using static non-synchronized SimpleDateFormat to avoid - race conditions -

- Fix problem where deserialisers were treating integers as strings -

- Update methods to make them null-safe -

- Fix many issues reported by coverity -

- Improve equals(), compareTo() and hash() in domain model -

- Improvements to the domain model for metadata classes -

- Fix CORS issues when downloading document -

- Implementation of case-handling with registryEntry and document upload -

- Better support in Javascript for OPTIONS -

- Adding concept description of mail integration -

- Improve setting of default values for GET on ny-journalpost -

- Better handling of required values during deserialisation -

- Changed tilknyttetDato (M620) from date to dateTime -

- Corrected some opprettetDato (M600) (de)serialisation errors. -

- Improve parse error reporting. -

- Started on OData search and filtering. -

- Added Contributor Covenant Code of Conduct to project. -

- Moved repository and project from Github to Gitlab. -

- Restructured repository, moved code into src/ and web/. -

- Updated code to use Spring Boot version 2. -

- Added support for OAuth2 authentication. -

- Fixed several bugs discovered by Coverity. -

- Corrected handling of date/datetime fields. -

- Improved error reporting when rejecting during deserialization. -

- Adjusted default values provided for ny-arkivdel, ny-mappe, - ny-saksmappe, ny-journalpost and ny-dokumentbeskrivelse. -

- Several fixes for korrespondansepart*. -

- Updated web GUI:

-

-

-

- Now handle both file upload and download. -

- Uses new OAuth2 authentication for login. -

- Forms now fetches default values from API using GET. -

- Added RFC 822 (email), TIFF and JPEG to list of possible file formats. -

+ - https://codeberg.org/pere/docbook-example/ +

- https://pere.codeberg.page/docbook-example/

The changes and improvements are extensive. Running diffstat on -the changes between git tag 0.1.1 and 0.2 shows 1098 files changed, -108666 insertions(+), 54066 deletions(-).

- -If free and open standardized archiving API sound interesting to -you, please contact us on IRC -(#nikita on -irc.freenode.net) or email -(nikita-noark -mailing list).

+If you want to learn more about DocBook and translations, I recommend +having a look at the DocBook +web site, +the DoCookBook +site, my earlier blog post on +how +the Skolelinux project processes and translates documentation, and a talk I gave earlier this year on +how +to translate and publish books using free software (Norwegian +only).

+ +As usual, if you use Bitcoin and want to show your support of my activities, please send Bitcoin donations to my address @@ -93,7 +132,7 @@ activities, please send Bitcoin donations to my address

@@ -101,111 +140,46 @@ activities, please send Bitcoin donations to my addressI have earlier covered the basics of trusted timestamping using the -'openssl ts' client. See the blog posts for -2014, -2016 -and -2017 -for those stories. But sometimes I want to integrate the timestamping -in other code, and recently I needed to integrate it into Python. -After searching a bit, I found -the -rfc3161 library which seemed like a good fit, but I soon -discovered it only worked for python version 2, and I needed something -that works with python version 3. Luckily I next came across -the rfc3161ng library, -a fork of the original rfc3161 library. Not only is it working with -python 3, it has fixed a few of the bugs in the original library, and -it has an active maintainer. I decided to wrap it up and make it -available in -Debian, and a few days ago it entered Debian unstable and testing.

- -Using the library is fairly straightforward. The only slightly -problematic step is to fetch the required certificates to verify the -timestamp. For some services it is straightforward, while for others -I have not yet figured out how to do it. Here is a small standalone -code example based on one of the integration tests in the library code:

- -

-#!/usr/bin/python3

-

-"""

-

-Python 3 script demonstrating how to use the rfc3161ng module to

-get trusted timestamps.

-

-The license of this code is the same as the license of the rfc3161ng

-library, ie MIT/BSD.

-

-"""

-

-import os

-import pyasn1.codec.der

-import rfc3161ng

-import subprocess

-import tempfile

-import urllib.request

-

-def store(f, data):

- f.write(data)

- f.flush()

- f.seek(0)

-

-def fetch(url, f=None):

- response = urllib.request.urlopen(url)

- data = response.read()

- if f:

- store(f, data)

- return data

-

-def main():

- with tempfile.NamedTemporaryFile() as cert_f,\

- tempfile.NamedTemporaryFile() as ca_f,\

- tempfile.NamedTemporaryFile() as msg_f,\

- tempfile.NamedTemporaryFile() as tsr_f:

-

- # First fetch certificates used by service

- certificate_data = fetch('https://freetsa.org/files/tsa.crt', cert_f)

- ca_data_data = fetch('https://freetsa.org/files/cacert.pem', ca_f)

-

- # Then timestamp the message

- timestamper = \

- rfc3161ng.RemoteTimestamper('http://freetsa.org/tsr',

- certificate=certificate_data)

- data = b"Python forever!\n"

- tsr = timestamper(data=data, return_tsr=True)

-

- # Finally, convert message and response to something 'openssl ts' can verify

- store(msg_f, data)

- store(tsr_f, pyasn1.codec.der.encoder.encode(tsr))

- args = ["openssl", "ts", "-verify",

- "-data", msg_f.name,

- "-in", tsr_f.name,

- "-CAfile", ca_f.name,

- "-untrusted", cert_f.name]

- subprocess.check_call(args)

-

-if '__main__' == __name__:

- main()

-

-

-The code fetches the required certificates, stores them as temporary -files, timestamps a simple message, stores the message and timestamp to -disk and asks 'openssl ts' to verify the timestamp. A timestamp is -around 1.5 kiB in size, and should be fairly easy to store for future -use.

- -As usual, if you use Bitcoin and want to show your support of my -activities, please send Bitcoin donations to my address -15oWEoG9dUPovwmUL9KWAnYRtNJEkP1u1b.

+ + +

+

+

+In 1979, Ole-Erik Yrvin submitted a master's thesis (hovedfagsoppgave) for the Cand. Scient. degree + at the Department of Sociology at the University of Oslo, commissioned by + Forbruker- og administrasjonsdepartementet (the Ministry of Consumer Affairs and Government Administration). The thesis evaluated + Angrefristloven (the Cooling-off Period Act) of 1972, and what he discovered led to the law being + amended four years later.

+ +I have known Ole-Erik for a while, and found it sad that his + thesis is no longer available, neither from the commissioning ministry + nor from the university. His attempts to have it scanned and published + on the Internet proved futile, so a while back I offered + to publish it and make it available on the Internet under + free-use terms. It is now ready, and the thesis + is available, among other places, via my list of + published books, both as a web page, a + digital + book in ePub format and on paper from lulu.com. I expect + it will also show up in online bookshops within a month or + two.

+ +All tables and figures have been recreated for better readability, some + typos have been corrected, and many references have gained more details + such as ISBN numbers and DOI references. Although I do not expect + this to become a bestseller, I hope this new edition can be of + benefit to the future.

+ +As usual, if you use Bitcoin and want to show your support of +my activities, I would appreciate it if you send Bitcoin donations +to my address +15oWEoG9dUPovwmUL9KWAnYRtNJEkP1u1b. Note, +payment with bitcoin is not anonymous. :)

A few days ago, I rescued a Windows victim over to Debian. To try to -rescue the remains, I helped set up automatic sync with Google Drive. -I did not find any sensible Debian package handling this -automatically, so I rebuilt the grive2 source from -the Ubuntu UPD8 PPA to do the -task and added an autostart desktop entry and a small shell script to -run in the background while the user is logged in to do the sync. -Here is a sketch of the setup for future reference.

- -I first created ~/googledrive, entered the directory and -ran 'grive -a' to authenticate the machine/user. Next, I -created an autostart hook in ~/.config/autostart/grive.desktop -to start the sync when the user logs in:

- -- --[Desktop Entry] -Name=Google drive autosync -Type=Application -Exec=/home/user/bin/grive-sync -

Finally, I wrote the ~/bin/grive-sync script to sync -~/googledrive/ with the files in Google Drive.

- -

-#!/bin/sh

-set -e

-cd ~/

-cleanup() {

- if [ "$syncpid" ] ; then

- kill $syncpid

- fi

-}

-trap cleanup EXIT INT QUIT

-/usr/lib/grive/grive-sync.sh listen googledrive 2>&1 | sed "s%^%$0:%" &

-syncpid=$!

-while true; do

- if ! xhost >/dev/null 2>&1 ; then

- echo "no DISPLAY, exiting as the user probably logged out"

- exit 1

- fi

- if [ ! -e /run/user/1000/grive-sync.sh_googledrive ] ; then

- /usr/lib/grive/grive-sync.sh sync googledrive

- fi

- sleep 300

-done 2>&1 | sed "s%^%$0:%"

-Feel free to use the setup if you want. It can be assumed to be -GNU GPL v2 licensed (or any later version, at your leisure), but I -doubt this code is possible to claim copyright on.

- -As usual, if you use Bitcoin and want to show your support of my -activities, please send Bitcoin donations to my address -15oWEoG9dUPovwmUL9KWAnYRtNJEkP1u1b.

+ +Members of the Norwegian government have demonstrated over the last few months that +assessing conflicts of interest (habilitet) is not their strong suit, and this applies to representatives of both +Arbeiderpartiet and Senterpartiet. Fortunately, things are +simpler in the private sector, as the disqualification rules only apply to those who +work for the people, not for themselves. The latest case is foreign minister +Huitfeldt. Yesterday came the news that +Riksadvokaten (the Director of Public Prosecutions) +has concluded that the deputy head of Økokrim can handle the case about +impartiality and insider knowledge concerning Huitfeldt, despite the fact that his +superior, the head of Økokrim, has declared a conflict of interest in the case. This +is a bit odd. In the guide +«Habilitet +i kommuner og fylkeskommuner» from Kommunal- og regionaldepartementet, +they explain what applies. Admittedly the guide does not apply to +Økokrim, which after all is neither a municipality nor a county municipality, but I do not get +the impression that these are rules that only apply to municipalities and +county municipalities: + +

++ +«2.1 Overview of the grounds for disqualification + +

The general rules on disqualification in the public +administration are given in +forvaltningsloven (the Public Administration Act) +sections 6 to 10. The main rule of the act on disqualification follows +from section 6. It gives three different grounds that can lead to a +public official or elected representative being disqualified. Section 6, first paragraph, +letters a to e list concrete ties between +the official and the case or the parties to the case that automatically lead to +disqualification. The second paragraph sets out a discretionary rule stating that +the official can also be disqualified after a concrete assessment of +the question of disqualification, where a long list of factors can be +relevant. The third paragraph contains rules on so-called derived +disqualification. This means that a subordinate official is disqualified +because a superior is disqualified.»

+

The law quite simply says «If the superior official is disqualified, +a decision in the case may not be made by a directly subordinate +official in the same administrative body either.» I assume the idea is that a +subordinate would risk adapting their conclusions to what the +superior would benefit from, in order to stay on good terms with the +superior. But I am not a lawyer and probably do not understand complicated +legal assessments. To quote «Kamerat Napoleon» (Animal Farm) by George +Orwell: «All animals are equal, but some animals are more equal than others».

It would come as no surprise to anyone that I am interested in -bitcoins and virtual currencies. I've been keeping an eye on virtual -currencies for many years, and it is part of the reason a few months -ago, I started writing a python library for collecting currency -exchange rates and trade on virtual currency exchanges. I decided to -name the end result valutakrambod, which perhaps can be translated to -small currency shop.

- -The library uses the tornado python library to handle HTTP and -websocket connections, and provides an asynchronous system for -connecting to and tracking several services. The code is available -from -github.

- -There are two example clients of the library. One is very simple and -list every updated buy/sell price received from the various services. -This code is started by running bin/btc-rates and call the client code -in valutakrambod/client.py. The simple client look like this: - -

-import functools

-import tornado.ioloop

-import valutakrambod

-class SimpleClient(object):

- def __init__(self):

- self.services = []

- self.streams = []

- pass

- def newdata(self, service, pair, changed):

- print("%-15s %s-%s: %8.3f %8.3f" % (

- service.servicename(),

- pair[0],

- pair[1],

- service.rates[pair]['ask'],

- service.rates[pair]['bid'])

- )

- async def refresh(self, service):

- await service.fetchRates(service.wantedpairs)

- def run(self):

- self.ioloop = tornado.ioloop.IOLoop.current()

- self.services = valutakrambod.service.knownServices()

- for e in self.services:

- service = e()

- service.subscribe(self.newdata)

- stream = service.websocket()

- if stream:

- self.streams.append(stream)

- else:

- # Fetch information from non-streaming services immediately

- self.ioloop.call_later(len(self.services),

- functools.partial(self.refresh, service))

- # as well as regularly

- service.periodicUpdate(60)

- for stream in self.streams:

- stream.connect()

- try:

- self.ioloop.start()

- except KeyboardInterrupt:

- print("Interrupted by keyboard, closing all connections.")

- pass

- for stream in self.streams:

- stream.close()

-The library client loops over all known "public" services, -initialises it, subscribes to any updates from the service, checks and -activates websocket streaming if the service provide it, and if no -streaming is supported, fetches information from the service and sets -up a periodic update every 60 seconds. The output from this client -can look like this:

- -- --Bl3p BTC-EUR: 5687.110 5653.690 -Bl3p BTC-EUR: 5687.110 5653.690 -Bl3p BTC-EUR: 5687.110 5653.690 -Hitbtc BTC-USD: 6594.560 6593.690 -Hitbtc BTC-USD: 6594.560 6593.690 -Bl3p BTC-EUR: 5687.110 5653.690 -Hitbtc BTC-USD: 6594.570 6593.690 -Bitstamp EUR-USD: 1.159 1.154 -Hitbtc BTC-USD: 6594.570 6593.690 -Hitbtc BTC-USD: 6594.580 6593.690 -Hitbtc BTC-USD: 6594.580 6593.690 -Hitbtc BTC-USD: 6594.580 6593.690 -Bl3p BTC-EUR: 5687.110 5653.690 -Paymium BTC-EUR: 5680.000 5620.240 -

The exchange order book is tracked in addition to the best buy/sell -price, for those that need to know the details.

- -The other example client is focusing on providing a curses view -with updated buy/sell prices as soon as they are received from the -services. This code is located in bin/btc-rates-curses and activated -by using the '-c' argument. Without the argument the "curses" output -is printed without using curses, which is useful for debugging. The -curses view look like this:

- -- -- Name Pair Bid Ask Spr Ftcd Age - BitcoinsNorway BTCEUR 5591.8400 5711.0800 2.1% 16 nan 60 - Bitfinex BTCEUR 5671.0000 5671.2000 0.0% 16 22 59 - Bitmynt BTCEUR 5580.8000 5807.5200 3.9% 16 41 60 - Bitpay BTCEUR 5663.2700 nan nan% 15 nan 60 - Bitstamp BTCEUR 5664.8400 5676.5300 0.2% 0 1 1 - Bl3p BTCEUR 5653.6900 5684.9400 0.5% 0 nan 19 - Coinbase BTCEUR 5600.8200 5714.9000 2.0% 15 nan nan - Kraken BTCEUR 5670.1000 5670.2000 0.0% 14 17 60 - Paymium BTCEUR 5620.0600 5680.0000 1.1% 1 7515 nan - BitcoinsNorway BTCNOK 52898.9700 54034.6100 2.1% 16 nan 60 - Bitmynt BTCNOK 52960.3200 54031.1900 2.0% 16 41 60 - Bitpay BTCNOK 53477.7833 nan nan% 16 nan 60 - Coinbase BTCNOK 52990.3500 54063.0600 2.0% 15 nan nan - MiraiEx BTCNOK 52856.5300 54100.6000 2.3% 16 nan nan - BitcoinsNorway BTCUSD 6495.5300 6631.5400 2.1% 16 nan 60 - Bitfinex BTCUSD 6590.6000 6590.7000 0.0% 16 23 57 - Bitpay BTCUSD 6564.1300 nan nan% 15 nan 60 - Bitstamp BTCUSD 6561.1400 6565.6200 0.1% 0 2 1 - Coinbase BTCUSD 6504.0600 6635.9700 2.0% 14 nan 117 - Gemini BTCUSD 6567.1300 6573.0700 0.1% 16 89 nan - Hitbtc+BTCUSD 6592.6200 6594.2100 0.0% 0 0 0 - Kraken BTCUSD 6565.2000 6570.9000 0.1% 15 17 58 - Exchangerates EURNOK 9.4665 9.4665 0.0% 16 107789 nan - Norgesbank EURNOK 9.4665 9.4665 0.0% 16 107789 nan - Bitstamp EURUSD 1.1537 1.1593 0.5% 4 5 1 - Exchangerates EURUSD 1.1576 1.1576 0.0% 16 107789 nan - BitcoinsNorway LTCEUR 1.0000 49.0000 98.0% 16 nan nan - BitcoinsNorway LTCNOK 492.4800 503.7500 2.2% 16 nan 60 - BitcoinsNorway LTCUSD 1.0221 49.0000 97.9% 15 nan nan - Norgesbank USDNOK 8.1777 8.1777 0.0% 16 107789 nan -

The code for this client is too complex for a simple blog post, so -you will have to check out the git repository to figure out how it -works. What I can tell is how the three last numbers on each line -should be interpreted. The first is how many seconds ago information -was received from the service. The second is how long ago, according -to the service, the provided information was updated. The last is an -estimate of how often the buy/sell values change.
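The Spr column in the curses view is not explained in the text. The figures shown are consistent with the spread being the difference between ask and bid, expressed as a percentage of the ask price. The sketch below rests on that assumption only. The function name spread_percent is hypothetical and not taken from the valutakrambod library.

```python
import math


def spread_percent(bid: float, ask: float) -> float:
    """Return the bid/ask spread as a percentage of the ask price.

    Assumed formula, inferred from the sample output: (ask - bid) / ask * 100.
    A missing price (nan) or a zero ask gives nan, mirroring the 'nan%' rows.
    """
    if math.isnan(bid) or math.isnan(ask) or ask == 0:
        return math.nan
    return (ask - bid) / ask * 100.0


# The BitcoinsNorway BTCEUR row from the sample output.
print("%.1f%%" % spread_percent(5591.84, 5711.08))  # prints 2.1%
```

Dividing by the bid price instead would give 0.6% rather than the displayed 0.5% for the Bl3p BTCEUR row, which is why the ask price is used as the denominator here.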

- -If you find this library useful, or would like to improve it, I -would love to hear from you. Note that for some of the services I've -implemented a trading API. It might be the topic of a future blog -post.

+ +I still enjoy Kodi and +LibreELEC as my multimedia center +at home. Sadly two of the services I really would like to use from +within Kodi are not easily available. The most wanted add-on would be +one making The Internet Archive +available, and it has +not been +working for many years. The second most wanted add-on is one +using the Invidious privacy enhanced +Youtube frontend. A plugin for this has been partly working, but +not been kept up to date in the Kodi add-on repository, and its +upstream seems to have given it up in April this year, when the git +repository was closed. A few days ago I got tired of this sad state +of affairs and decided to +have +a go at improving the Invidious add-on. +Google has +already attacked the Invidious concept, so it needs all the support +it can get. My small contribution here is to improve the service +status on Kodi.

+ +I added support to the Invidious add-on for automatically picking a +working Invidious instance, instead of requiring the user to specify +the URL to a specific instance after installation. I also had a look +at the set of patches floating around in the various forks on github, +and decided to clean up at least some of the features I liked and +integrate them into my new release branch. Now the plugin can handle +channel and short video items in search results. Earlier it could +only handle single video instances in the search response. I also +brushed up the set of metadata displayed a bit, but hope I can figure +out how to get more relevant metadata displayed.

+ +Because I only use Kodi 20 myself, I only test on version 20 and am +only motivated to ensure version 20 is working. Because of API changes +between version 19 and 20, I suspect it will fail with earlier Kodi +versions.

+ +I already +asked to have +the add-on added to the official Kodi 20 repository, and am +waiting to hear back from the repo maintainers.

As usual, if you use Bitcoin and want to show your support of my activities, please send Bitcoin donations to my address @@ -436,7 +297,7 @@ activities, please send Bitcoin donations to my address

@@ -444,47 +305,57 @@ activities, please send Bitcoin donations to my addressBack in February, I got curious to see -if -VLC now supported Bittorrent streaming. It did not, despite the -fact that the idea and code to handle such streaming had been floating -around for years. I did however find -a standalone plugin -for VLC to do it, and half a year later I decided to wrap up the -plugin and get it into Debian. I uploaded it to NEW a few days ago, -and am very happy to report that it -entered -Debian a few hours ago, and should be available in Debian/Unstable -tomorrow, and Debian/Testing in a few days.

- -With the vlc-plugin-bittorrent package installed you should be able -to stream videos using a simple call to

- -- -It can handle magnet links too. Now if only native vlc had -bittorrent support. Then a lot more would be helping each other to -share public domain and creative commons movies. The plugin need some -stability work with seeking and picking the right file in a torrent -with many files, but is already usable. Please note that the plugin -is not removing downloaded files when vlc is stopped, so it can fill -up your disk if you are not careful. Have fun. :) - --vlc https://archive.org/download/TheGoat/TheGoat_archive.torrent -

I would love to get help maintaining this package. Get in touch if -you are interested.

+ +With yesterday's +release of Debian +12 Bookworm, I am happy to know that +the interactive +application firewall OpenSnitch is available for a wider audience. +I have been running it for a few weeks now, and have been surprised +by some of the programs connecting to the Internet. Some programs +are obviously calling out from my machine, like the NTP network based +clock adjusting system and Tor to reach other Tor clients, but others +were more dubious. For example, the KDE Window manager tries to look up +the host name in DNS, for no apparent reason, but if this lookup is +blocked the KDE desktop gets periodically stuck when I use it. Another +surprise was how much Firefox calls home directly to mozilla.com, +mozilla.net and googleapis.com, to mention a few, when I visit other +web pages. This direct connection happens even if I told Firefox to +always use a proxy, and the proxy setting is ignored for this traffic. +Other surprising connections come from audacity and dirmngr (I do not +use Gnome). It took some trial and error to get a good default set of +permissions. Without it, I would get popups asking for permissions at +any time, including the most inconvenient ones, when I am in the middle of +a time sensitive gaming session.

+ +I suspect some application developers should rethink when they need +to use network connections or DNS lookups, and recommend testing +OpenSnitch (only apt install opensnitch away in Debian +Bookworm) to locate and report any surprising Internet connections on +your desktop machine.

+ +At the moment the upstream developer and Debian package maintainer +is working on making the system more reliable in Debian, by enabling +the eBPF kernel module to track processes and connections instead of +depending on content in /proc/. This should enter unstable fairly +soon.

As usual, if you use Bitcoin and want to show your support of my activities, please send Bitcoin donations to my address 15oWEoG9dUPovwmUL9KWAnYRtNJEkP1u1b.

+ +Update 2023-06-12: I got a tip about +a list of privacy +issues in Free Software and the +#debian-privacy IRC +channel discussing these topics.

+I continue to explore my Kodi installation, and today I wanted to -tell it to play a youtube URL I received in a chat, without having to -insert search terms using the on-screen keyboard. After searching the -web for API access to the Youtube plugin and testing a bit, I managed -to find a recipe that worked. If you have a kodi instance with its API -available from http://kodihost/jsonrpc, you can try the following to -check out a nice cover band.

- -curl --silent --header 'Content-Type: application/json' \

- --data-binary '{ "id": 1, "jsonrpc": "2.0", "method": "Player.Open",

- "params": {"item": { "file":

- "plugin://plugin.video.youtube/play/?video_id=LuRGVM9O0qg" } } }' \

- http://projector.local/jsonrpcI've extended kodi-stream program to take a video source as its -first argument. It can now handle direct video links, youtube links -and 'desktop' to stream my desktop to Kodi. It is almost like a -Chromecast. :)
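The curl call above can be sketched in Python as well, using only the standard library. The JSON-RPC payload is the one from the curl example. The function names build_player_open and play_on_kodi are hypothetical helpers made up for this illustration, and the endpoint URL has to be replaced with the address of your own Kodi instance.

```python
import json
import urllib.request


def build_player_open(video_id: str, request_id: int = 1) -> dict:
    """Build the Player.Open JSON-RPC request used in the curl example."""
    return {
        "id": request_id,
        "jsonrpc": "2.0",
        "method": "Player.Open",
        "params": {
            "item": {
                "file": "plugin://plugin.video.youtube/play/?video_id=" + video_id
            }
        },
    }


def play_on_kodi(endpoint: str, video_id: str) -> dict:
    """POST the request to a Kodi JSON-RPC endpoint and return the decoded reply."""
    body = json.dumps(build_player_open(video_id)).encode("utf-8")
    request = urllib.request.Request(
        endpoint, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling play_on_kodi("http://projector.local/jsonrpc", "LuRGVM9O0qg") would send the same request as the curl command. It assumes the Kodi HTTP API is reachable without authentication, as in the curl example.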

+ +There is a European standard for reading utility meters like water, +gas, electricity or heat distribution meters. The +Meter-Bus standard +(EN 13757-2, EN 13757-3 and EN 13757-4) provides a cross-vendor way +to talk to and collect meter data. I ran into this standard when I +wanted to monitor some heat distribution meters, and managed to find +free software that could do the job. The meters in question broadcast +encrypted messages with meter information via radio, and the hardest +part was to track down the encryption keys from the vendor. With this +in place I could set up an MQTT gateway to submit the meter data for +graphing.

+ +The free software systems in question, +rtl-wmbus to +read the messages from a software defined radio, and +wmbusmeters to +decrypt and decode the content of the messages, are working very well +and allow me to get frequent updates from my meters. I got in touch +with upstream last year to see if there was any interest in publishing +the packages via Debian. I was very happy to learn that Fredrik +Öhrström volunteered to maintain the packages, and I have since +assisted him in getting Debian package build rules in place as well as +sponsoring the packages into the Debian archive. Sadly we completed +it too late for them to become part of the next stable Debian release +(Bookworm). The wmbusmeters package just cleared the NEW queue. It +will need some work to fix a build problem, but I expect Fredrik will +find a solution soon.

+ +If you have an infrastructure meter supporting the Meter Bus +standard, I strongly recommend having a look at these nice +packages.

As usual, if you use Bitcoin and want to show your support of my activities, please send Bitcoin donations to my address @@ -520,7 +404,7 @@ activities, please send Bitcoin donations to my address

@@ -528,19 +412,47 @@ activities, please send Bitcoin donations to my addressIt might seem obvious that software created using tax money should -be available for everyone to use and improve. Free Software -Foundation Europe recently started a campaign to help get more people -to understand this, and I just signed the petition on -Public Money, Public Code to help -them. I hope you too will do the same.

+ +The LinuxCNC project is making headway these days. A lot of +patches and issues have seen activity on +the project github +pages recently. A few weeks ago there was a developer gathering +over at the Tormach headquarters in +Wisconsin, and now we are planning a new gathering in Norway. If you +wonder what LinuxCNC is, let's quote Wikipedia:

+ ++"LinuxCNC is a software system for numerical control of +machines such as milling machines, lathes, plasma cutters, routers, +cutting machines, robots and hexapods. It can control up to 9 axes or +joints of a CNC machine using G-code (RS-274NGC) as input. It has +several GUIs suited to specific kinds of usage (touch screen, +interactive development)." ++ +

The Norwegian developer gathering takes place the weekend of June 16th +to 18th this year, and is open to everyone interested in contributing +to LinuxCNC. Up to date information about the gathering can be found +in +the +developer mailing list thread where the gathering was announced. +Thanks to the good people at +Debian, +Redpill-Linpro and +NUUG Foundation, we +have enough sponsor funds to pay for food and shelter for the people +traveling from afar to join us. If you would like to join the +gathering, get in touch.

+ +As usual, if you use Bitcoin and want to show your support of my +activities, please send Bitcoin donations to my address +15oWEoG9dUPovwmUL9KWAnYRtNJEkP1u1b.

A few days ago, I wondered if there are any privacy respecting -health monitors and/or fitness trackers available for sale these days. -I would like to buy one, but do not want to share my personal data -with strangers, nor be forced to have a mobile phone to get data out -of the unit. I've received some ideas, and would like to share them -with you. - -One interesting data point was a pointer to a Free Software app for -Android named -Gadgetbridge. -It provides cloudless collection and storing of data from a variety of -trackers. Its -list -of supported devices is a good indicator for units where the -protocol is fairly open, as it is obviously being handled by Free -Software. Other units are reportedly encrypting the collected -information with their own public key, making sure only the vendor -cloud service is able to extract data from the unit. The people -contacting me about Gadgetbridge said they were using -Amazfit -Bip and -Xiaomi -Band 3.

- -I also got a suggestion to look at some of the units from Garmin. -I was told their GPS watches can be connected via USB and show up as a -USB storage device with -Garmin -FIT files containing the collected measurements. While -proprietary, FIT files apparently can be read at least by -GPSBabel and the -GpxPod Nextcloud -app. It is unclear to me if they can read step count and heart rate -data. The person I talked to was using a -Garmin Forerunner -935, which is a fairly expensive unit. I doubt it is worth it for -a unit where the vendor clearly is trying its best to move from open -to closed systems. I still remember when Garmin dropped NMEA support -in its GPSes.

- -A final idea was to build one's own unit, perhaps by basing it on a -wearable hardware platform like -the Flora Geo -Watch. Sounds like fun, but I have more money than time to spend on -the topic, so I suspect it will have to wait for another time.

- -While I was working on tracking down links, I came across an -inspiring TED talk by Dave deBronkart about -being an -e-patient, and discovered the web site -Participatory -Medicine. If you too want to track your own health and fitness -without having information about your private life floating around on -computers owned by others, I recommend checking it out.

+ +A bit delayed, +the interactive +application firewall OpenSnitch package in Debian has now got the +latest fixes ready for Debian Bookworm. Because it depends on a +package missing on some architectures, the autopkgtest check of the +testing migration script did not understand that the tests were +actually working, so the migration was delayed. A bug in the package +dependencies is also fixed, so those installing the firewall package +(opensnitch) now also get the GUI admin tool (python3-opensnitch-ui) +installed by default. I am very grateful to Gustavo Iñiguez Goya for +his work on getting the package ready for Debian Bookworm.

+ +Armed with this package I have discovered some surprising +connections from programs I believed were able to work completely +offline, and it has already proven its worth, at least to me. If you +too want to get more familiar with the kind of programs using +Internet connections on your machine, I recommend testing apt +install opensnitch in Bookworm and see what you think.

+ +The package is still not able to build its eBPF module within +Debian. Not sure how much work it would be to get it working, but +suspect some kernel related packages need to be extended with more +header files to get it working.

As usual, if you use Bitcoin and want to show your support of my activities, please send Bitcoin donations to my address @@ -612,7 +493,7 @@ activities, please send Bitcoin donations to my address

@@ -620,28 +501,141 @@ activities, please send Bitcoin donations to my addressDear lazyweb,

- -I wonder, is there a fitness tracker / health monitor available for -sale today that respects the user's privacy? With this I mean a -watch/bracelet capable of measuring pulse rate and other -fitness/health related values (and by all means, also the correct time -and location if possible), which are only provided for -me to extract/read from the unit with a computer without a radio beacon -and Internet connection. In other words, it does not depend on a cell -phone app, and does not make the measurements available via other people's -computers (aka "the cloud"). The collected data should be available -using only free software. I'm not interested in depending on some -non-free software that will leave me high and dry some time in the -future. I've been unable to find any such unit. I would like to buy -it. The ones I have seen for sale here in Norway are proud to report -that they share my health data with strangers (aka "cloud enabled"). -Is there an alternative? I'm not interested in giving money to people -requiring me to accept "privacy terms" to allow myself to measure my -own health.

- + +While visiting a convention during Easter, it occurred to me that +it would be great if I could have a digital Dictaphone with +transcribing capabilities, providing me with texts to cut-n-paste into +stuff I need to write. The background is that long drives often bring +up the urge to write on texts I am working on, which of course is out +of the question while driving. With the release of +OpenAI Whisper, this +seems to be within reach with Free Software, so I decided to give it a +go. OpenAI Whisper is a Linux based neural network system to read in +audio files and provide text representation of the speech in that +audio recording. It handles multiple languages and according to its +creators can even translate into a different language than the spoken +one. I have not tested the latter feature. It can either use the CPU +or a GPU with CUDA support. As far as I can tell, CUDA in practice +limits that feature to NVidia graphics cards. I have few of those, as +they do not work great with free software drivers, and have not tested +the GPU option. While looking into the matter, I did discover some +work to provide CUDA support on non-NVidia GPUs, and some work with +the library used by Whisper to port it to other GPUs, but have not +spent much time looking into GPU support yet. I've so far used an old +X220 laptop as my test machine, and only transcribed using its +CPU.

+ +As it is unthinkable from a privacy standpoint to use computers +under control of someone else (aka a "cloud" service) to transcribe +one's thoughts and personal notes, I want to run the transcribing +system locally on my own computers. The only sensible approach to me +is to make the effort I put into this available for any Linux user and +to upload the needed packages into Debian. Looking at Debian Bookworm, I +discovered that only three packages were missing, +tiktoken, +triton, and +openai-whisper. For a while +I also believed +ffmpeg-python was +needed, but as its +upstream +seems to have vanished I found it safer +to rewrite +whisper to stop depending on it than to introduce ffmpeg-python +into Debian. I decided to place these packages under the umbrella of +the Debian Deep +Learning Team, which seems like the best team to look after such +packages. Discussing the topic within the group also made me aware +that the triton package was already a future dependency of newer +versions of the torch package being planned, and would be needed after +Bookworm is released.

+ +All required code packages have now been waiting in +the Debian NEW +queue since Wednesday, heading for Debian Experimental until +Bookworm is released. An unsolved issue is how to handle the neural +network models used by Whisper. The default behaviour of Whisper is +to require Internet connectivity and download the model requested to +~/.cache/whisper/ on first invocation. This obviously would +fail the +deserted island test of free software as the Debian packages would +be unusable for someone stranded with only the Debian archive and a solar +powered computer on a deserted island.

+ +Because of this, I would love to include the models in the Debian +mirror system. This is problematic, as the models are very large +files, which would put a heavy strain on the Debian mirror +infrastructure around the globe. The strain would be even higher if +the models change often, which luckily as far as I can tell they do +not. The small model, which according to its creator is most useful +for English and in my experience is not doing a great job there +either, is 462 MiB (deb is 414 MiB). The medium model, which to me +seems to handle English speech fairly well, is 1.5 GiB (deb is 1.3 GiB) +and the large model is 2.9 GiB (deb is 2.6 GiB). I would assume +everyone with enough resources would prefer to use the large model for +highest quality. I believe the models themselves would have to go +into the non-free part of the Debian archive, as they are not really +including any useful source code for updating the models. The +"source", aka the model training set, according to the creators +consists of "680,000 hours of multilingual and multitask supervised +data collected from the web", which to me reads as material with +unknown copyright terms that is unavailable to the general public. In other +words, the source is not available according to the Debian Free +Software Guidelines and the model should be considered non-free.

+ +I asked the Debian FTP masters for advice regarding uploading a +model package on their IRC channel, and based on the feedback there it +is still unclear to me if such a package would be accepted into the +archive. In any case I wrote build rules for an +OpenAI +Whisper model package and +modified the +Whisper code base to prefer shared files under /usr/ and +/var/ over user specific files in ~/.cache/whisper/ +to be able to use these model packages, to prepare for such a +possibility. One solution might be to include only one of the models +(small or medium, I guess) in the Debian archive, and ask people to +download the others from the Internet. Not quite sure what to do +here, and advice is most welcome (use the debian-ai mailing list).
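The lookup order described above, shared files first and the per-user cache last, can be sketched roughly like this. This is a minimal illustration and not the actual patch: the directory names `/usr/share/whisper` and `/var/lib/whisper`, the `.pt` file suffix and the function name `find_model` are assumptions made for the example.

```python
from pathlib import Path

# Hypothetical locations. The text above only says that shared files under
# /usr/ and /var/ are preferred over ~/.cache/whisper/.
SYSTEM_MODEL_DIRS = [Path("/usr/share/whisper"), Path("/var/lib/whisper")]
USER_MODEL_DIR = Path.home() / ".cache" / "whisper"


def find_model(name, system_dirs=SYSTEM_MODEL_DIRS, user_dir=USER_MODEL_DIR):
    """Return the first existing model file, checking system dirs first.

    Returns None when no copy is found, which is the point where a
    download into the user cache would normally take over.
    """
    filename = f"{name}.pt"  # assumed file naming
    for directory in [*system_dirs, user_dir]:
        candidate = Path(directory) / filename
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    print(find_model("small"))
```

With this ordering a packaged model always wins over a stale copy in the home directory, and a machine without network access keeps working as long as a model package is installed.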

+ +To make it easier to test the new packages while I wait for them to +clear the NEW queue, I created an APT source targeting bookworm. The reason I +selected Bookworm instead of Bullseye, even though I know the latter +would reach more users, is that some of the required dependencies are +missing from Bullseye and during this phase of testing I did not want +to backport a lot of packages just to get up and running.

+ +Here is a recipe to run as user root if you want to test OpenAI +Whisper using Debian packages on your Debian Bookworm installation, +first adding the APT repository GPG key to the list of trusted keys, +then setting up the APT repository and finally installing the packages +and one of the models:

+
+
+curl https://geekbay.nuug.no/~pere/openai-whisper/D78F5C4796F353D211B119E28200D9B589641240.asc \
+  -o /etc/apt/trusted.gpg.d/pere-whisper.asc
+mkdir -p /etc/apt/sources.list.d
+cat > /etc/apt/sources.list.d/pere-whisper.list <<EOF
+deb https://geekbay.nuug.no/~pere/openai-whisper/ bookworm main
+deb-src https://geekbay.nuug.no/~pere/openai-whisper/ bookworm main
+EOF
+apt update
+apt install openai-whisper
+
+

The package works for me, but has not yet been tested on any other +computer than my own. With it, I have been able to (badly) transcribe +a 2 minute 40 second Norwegian audio clip to test using the small +model. This took 11 minutes and around 2.2 GiB of RAM. Transcribing +the same file with the medium model gave an accurate text in 77 minutes +using around 5.2 GiB of RAM. My test machine had too little memory to +test the large model, which I believe requires 11 GiB of RAM. In +short, this now works for me using Debian packages, and I hope it will +for you and everyone else once the packages enter Debian.
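To put the timings above in perspective, here is a small back-of-the-envelope calculation of how many seconds of CPU time each second of audio cost on the test laptop. The helper name `realtime_factor` is made up for the example, and the projection for longer recordings assumes processing time scales linearly with clip length, which has not been measured.

```python
def realtime_factor(clip_seconds, processing_seconds):
    """Seconds of processing needed per second of audio."""
    return processing_seconds / clip_seconds


CLIP_SECONDS = 2 * 60 + 40  # the 2 minute 40 second test clip

small_factor = realtime_factor(CLIP_SECONDS, 11 * 60)   # small model run
medium_factor = realtime_factor(CLIP_SECONDS, 77 * 60)  # medium model run

print(f"small:  {small_factor:.3f} x real time")
print(f"medium: {medium_factor:.3f} x real time")

# Rough projection only, assuming linear scaling with clip length.
one_hour_estimate = medium_factor * 1.0
print(f"one hour of audio, medium model: about {one_hour_estimate:.1f} hours")
```

In other words, the small model ran at roughly 4 times real time and the medium model at roughly 29 times real time on this CPU, so a long dictation would have to be left transcribing overnight.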

+ +Now I can start on the audio recording part of this project.


@@ -649,7 +643,7 @@ activities, please send Bitcoin donations to my address @@ -657,67 +651,64 @@ activities, please send Bitcoin donations to my addressFor a while now, I have looked for a sensible way to share images -with my family using a self hosted solution, as it is unacceptable to -place images from my personal life under the control of strangers -working for data hoarders like Google or Dropbox. The last few days I -have drafted an approach that might work out, and I would like to -share it with you. I would like to publish images on a server under -my control, and point some Internet connected display units using some -free and open standard to the images I published. As my primary -language is not limited to ASCII, I need to store metadata using -UTF-8. Many years ago, I hoped to find a digital photo frame capable -of reading a RSS feed with image references (aka using the -<enclosure> RSS tag), but was unable to find a current supplier -of such frames. In the end I gave up that approach.

- -Some months ago, I discovered that -XScreensaver is able to -read images from a RSS feed, and used it to set up a screen saver on -my home info screen, showing images from the Daily images feed from -NASA. This proved to work well. More recently I discovered that -Kodi (both using -OpenELEC and -LibreELEC) provide the -Feedreader -screen saver capable of reading a RSS feed with images and news. For -fun, I used it this summer to test Kodi on my parents TV by hooking up -a Raspberry PI unit with LibreELEC, and wanted to provide them with a -screen saver showing selected pictures from my selection.

- -Armed with motivation and a test photo frame, I set out to generate -an RSS feed for the Kodi instance. I adjusted my Freedombox instance, created -/var/www/html/privatepictures/, wrote a small Perl script to extract -title and description metadata from the photo files and generate the -RSS file. I ended up using Perl instead of Python, as the -libimage-exiftool-perl Debian package seemed to handle the EXIF/XMP -tags I ended up using, while python3-exif did not. The relevant EXIF -tags only support ASCII, so I had to find better alternatives. XMP -seems to have the support I need.

- -I am a bit unsure which EXIF/XMP tags to use, as I would like to -use tags that can be easily added/updated using normal free software -photo managing software. I ended up using the tags set using this -exiftool command, as these tags can also be set using digiKam:

- -- --exiftool -headline='The RSS image title' \ - -description='The RSS image description.' \ - -subject+=for-family photo.jpeg -

I initially tried the "-title" and "keyword" tags, but they were -invisible in digiKam, so I changed to "-headline" and "-subject". I -use the keyword/subject 'for-family' to flag that the photo should be -shared with my family. Images with this keyword set are located and -copied into my Freedombox for the RSS generating script to find.

- -Are there better ways to do this? Get in touch if you have better -suggestions.

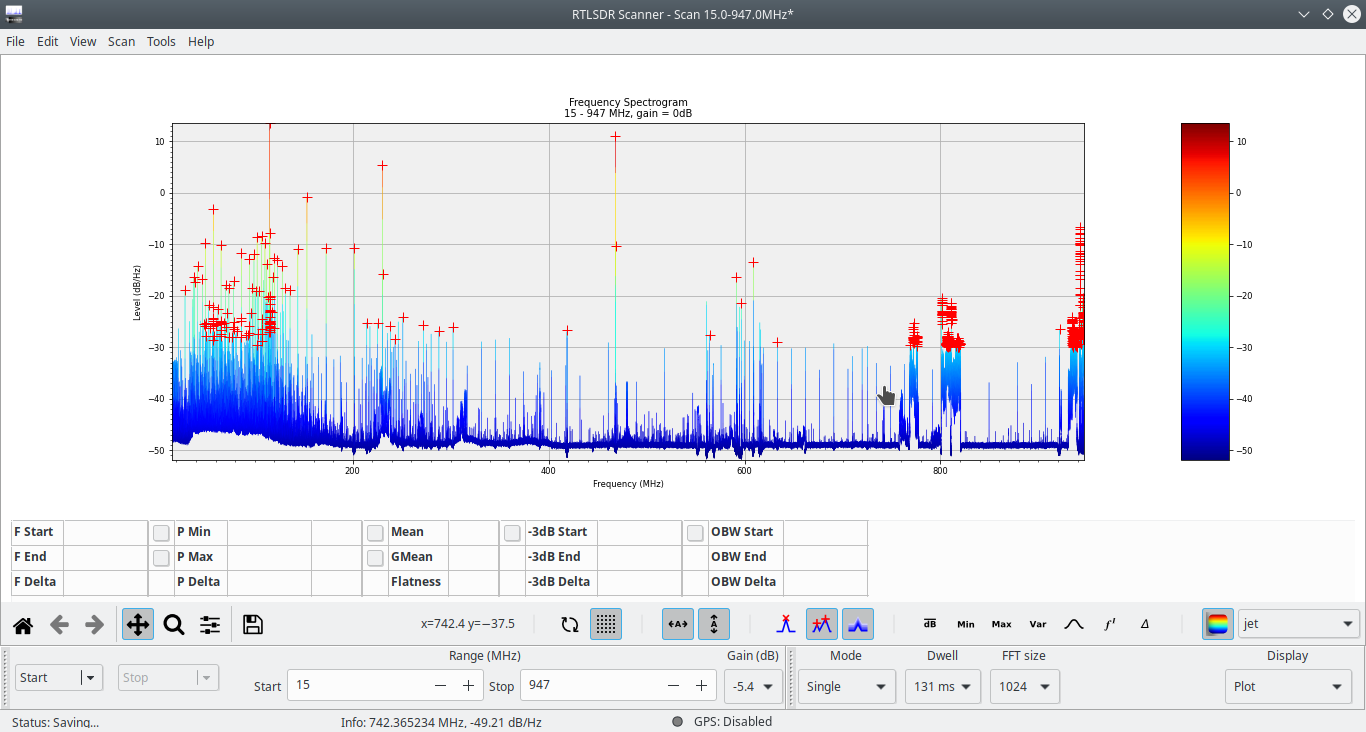

+ +Today I finally found time to track down a useful radio frequency +scanner for my software defined radio. Just for fun I tried to locate +the radios used in the area, and a good start would be to scan all +the frequencies to see what is in use. I've tried to find a useful +program earlier, but ran out of time before I managed to find a useful +tool. This time I was more successful, and after a few false leads I +found a description of +rtlsdr-scanner +over at the Kali site, and was able to track down +the +Kali package git repository to build a deb package for the +scanner. Sadly the package is missing from the Debian project itself, +at least in Debian Bullseye. Two runtime dependencies, +python-visvis +and +python-rtlsdr, +had to be built and installed separately. Luckily 'gbp +buildpackage' handled them just fine and no further packages had +to be manually built. The end result worked out of the box after +installation.

+ +My initial scans for FM channels worked just fine, so I knew the +scanner was functioning. But when I tried to scan every frequency +from 100 to 1000 MHz, the program stopped unexpectedly near +completion. After some debugging I discovered that the USB software radio I +used rejected frequencies above 948 MHz, triggering an unreported +exception breaking the scan. Changing the scan to end at 957 MHz worked +better. I similarly found the lower limit to be around 15 MHz, and ended +up with the following full scan:

+ + + +Saving the scan did not work, but exporting it as a CSV file worked +just fine. I ended up with around 477k CSV lines with dB level for +the given frequency.
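With that many lines, a few lines of Python are enough to pull out the strongest signals from the export. This is only a sketch: the exact column layout of the rtlsdr-scanner CSV export is not described here, so the column positions are parameters, and the sample data below is made up for the illustration and not taken from a real scan.

```python
import csv
import heapq
import io


def strongest(rows, count=3, freq_col=0, level_col=1):
    """Return the `count` (frequency, level) pairs with the highest level.

    The column positions are assumptions; adjust them to match the real
    export. Header lines and malformed rows are skipped.
    """
    readings = []
    for row in rows:
        try:
            readings.append((float(row[freq_col]), float(row[level_col])))
        except (ValueError, IndexError):
            continue
    return heapq.nlargest(count, readings, key=lambda reading: reading[1])


if __name__ == "__main__":
    # Made-up sample standing in for the exported file.
    sample = io.StringIO(
        "Frequency (MHz),Level (dB)\n"
        "88.7,-42.5\n"
        "100.0,-71.0\n"
        "433.9,-55.2\n"
    )
    for freq, level in strongest(csv.reader(sample), count=2):
        print(f"{freq:8.1f} MHz  {level:6.1f} dB")
```

For the real file, replace the `io.StringIO` sample with `open("scan.csv", newline="")`, where the file name is whatever was chosen during export.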

+ +The save failure seems to be a missing UTF-8 encoding issue in the +Python code. Will see if I can find time to send a patch +upstream +later to fix this exception:

+
+Traceback (most recent call last):
+  File "/usr/lib/python3/dist-packages/rtlsdr_scanner/main_window.py", line 485, in __on_save
+    save_plot(fullName, self.scanInfo, self.spectrum, self.locations)
+  File "/usr/lib/python3/dist-packages/rtlsdr_scanner/file.py", line 408, in save_plot
+    handle.write(json.dumps(data, indent=4))
+TypeError: a bytes-like object is required, not 'str'
+
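A TypeError like this one is what Python 3 raises when a `str` is written to a file opened in binary mode. I have not checked how `file.py` actually opens its handle, so the following is only a sketch of the general problem and two possible fixes, with made-up function names, and not the upstream patch.

```python
import json
import os
import tempfile


def save_plot_binary(path, data):
    """Keep the binary handle, but encode the JSON text as UTF-8 first."""
    with open(path, "wb") as handle:
        handle.write(json.dumps(data, indent=4).encode("utf-8"))


def save_plot_text(path, data):
    """Alternative: open the file in text mode with an explicit encoding."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(json.dumps(data, indent=4))


if __name__ == "__main__":
    data = {"scan": "blåbær", "levels": [1.5, -42.0]}
    path = os.path.join(tempfile.mkdtemp(), "scan.json")

    # Reproduce the failure: str written to a binary handle.
    try:
        with open(path, "wb") as handle:
            handle.write(json.dumps(data, indent=4))
    except TypeError as error:
        print("fails as in the traceback:", error)

    save_plot_binary(path, data)
    with open(path, encoding="utf-8") as handle:
        print(json.load(handle) == data)
```

Either variant would avoid the exception. Which one fits best depends on whether the rest of the scanner code expects a binary or a text file handle.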


@@ -725,14 +716,14 @@ activities, please send Bitcoin donations to my address